Understanding the Extension of Kubernetes APIs with Custom Resource Definitions


Kubernetes keeps evolving, and each release gives developers and operations teams more flexibility and extensibility. One feature I came across is the Custom Resource Definition (CRD). It lets users create their own resource types within Kubernetes, extending its API. This flexibility is key in an ecosystem as diverse as that of cloud-native applications. Developers can define a custom resource with its own rules, just like built-in Kubernetes resources such as Pods, RBAC roles, ReplicaSets, and API Services, and the Kubernetes API server then serves those custom resources alongside the built-in ones.

What is a Custom Resource Definition (CRD)?

A resource is an endpoint in the Kubernetes API that stores a collection of API objects of a certain kind. A custom resource is an extension of the Kubernetes API that is not necessarily available in a default Kubernetes installation.

A Custom Resource Definition is a Kubernetes resource that lets you define custom resources in the Kubernetes API.

What happens when you create a Custom Resource Definition?

When you create a CRD, Kubernetes validates the definition: the apiVersion, kind, metadata, and spec must be well formed, and the rules specified in openAPIV3Schema must form a valid schema. Custom resources created later are validated against that schema. Once the CRD is validated, it is accepted and stored in the cluster's etcd. The etcd storage ensures that the state of your custom resources is persistent and can be retrieved and managed across the cluster.
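To make the schema check more concrete, here is a minimal Go sketch of the kind of type check that the properties in an openAPIV3Schema imply. It is only an illustration: the real API server uses a full OpenAPI v3 validator, and the names fieldTypes and validateSpec are hypothetical. It assumes a spec with two string fields, documentName and text, like the pdfdoc example used in this article.

```go
package main

import (
	"fmt"
	"sort"
)

// fieldTypes is a hypothetical, heavily simplified stand-in for the
// properties section of an openAPIV3Schema: field name -> expected type.
var fieldTypes = map[string]string{
	"documentName": "string",
	"text":         "string",
}

// validateSpec reports every known field whose value does not match the
// expected type. Unknown and missing fields are ignored in this sketch.
func validateSpec(spec map[string]interface{}) []string {
	var problems []string
	for name, want := range fieldTypes {
		value, present := spec[name]
		if !present {
			continue // fields are optional in this sketch
		}
		if _, ok := value.(string); want == "string" && !ok {
			problems = append(problems,
				fmt.Sprintf("spec.%s: expected %s, got %T", name, want, value))
		}
	}
	sort.Strings(problems) // map iteration order is random; sort for stable output
	return problems
}

func main() {
	good := map[string]interface{}{"documentName": "build", "text": "hello"}
	bad := map[string]interface{}{"documentName": 42}

	fmt.Println("problems in good spec:", len(validateSpec(good)))
	fmt.Println("problems in bad spec:", validateSpec(bad))
}
```

A spec that passes returns no problems, while a wrongly typed field is reported with the path of the offending field, which is roughly the feedback you get from kubectl when an object violates the schema.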

The API server then creates new RESTful API endpoints for your custom resource. For example, if you create a CRD for a custom resource called pdfdocs in the alpharm.henry.com API group, the API server will create endpoints such as:

/apis/alpharm.henry.com/v1/pdfdocs

/apis/alpharm.henry.com/v1/namespaces/{namespace}/pdfdocs/{name}

These endpoints can be used to perform CRUD operations.
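As a small illustration of how these paths are put together, the Go sketch below assembles them from the group, version, namespace, and plural name. The helper names collectionPath and itemPath are hypothetical, and the namespace default and the object name my-document are simply the values used in the example in this article.

```go
package main

import "fmt"

// collectionPath returns the path that lists a namespaced custom resource
// within one namespace.
func collectionPath(group, version, namespace, plural string) string {
	return fmt.Sprintf("/apis/%s/%s/namespaces/%s/%s", group, version, namespace, plural)
}

// itemPath returns the path of a single named object in that collection.
func itemPath(group, version, namespace, plural, name string) string {
	return collectionPath(group, version, namespace, plural) + "/" + name
}

func main() {
	fmt.Println(collectionPath("alpharm.henry.com", "v1", "default", "pdfdocs"))
	fmt.Println(itemPath("alpharm.henry.com", "v1", "default", "pdfdocs", "my-document"))
}
```

In practice you rarely build these paths by hand, since kubectl and client libraries do it for you, but seeing the pieces helps when reading API server logs or RBAC errors.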

The resources are then watched by controllers, which act on the information in each resource. Once you have a custom controller or operator in place, it manages the custom resource and takes the appropriate actions to ensure that its desired state is maintained.
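The idea of keeping the desired state in place can be sketched in a few lines of Go. This is a conceptual illustration only, not how any particular operator is written: the Document type, the reconcile function, and the action names are all hypothetical.

```go
package main

import "fmt"

// Document is a hypothetical, simplified view of a custom resource.
type Document struct {
	Name string
	Text string
}

// reconcile compares the desired state (from the custom resource) with the
// observed state (what currently exists) and returns the action a controller
// would take. The action names are illustrative only.
func reconcile(desired, observed *Document) string {
	switch {
	case desired == nil && observed == nil:
		return "nothing to do"
	case desired == nil:
		return "delete"
	case observed == nil:
		return "create"
	case desired.Text != observed.Text:
		return "update"
	default:
		return "in sync"
	}
}

func main() {
	want := &Document{Name: "my-document", Text: "second draft"}
	have := &Document{Name: "my-document", Text: "first draft"}

	fmt.Println(reconcile(want, nil))  // desired exists, nothing observed yet
	fmt.Println(reconcile(want, have)) // both exist but differ
	fmt.Println(reconcile(want, want)) // states match
}
```

A real controller runs this kind of comparison every time it is notified of a change, and usually again on a periodic resync.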

Features to consider when a CRD is created

Version: v1. It identifies the version of the custom resource.

Kind: pdfdoc. This is typically the name of the custom resource.

spec.names: defines how to describe your custom resource.

metadata.name: this is the name of the CRD.

Group: alpharm.henry.com. It is usually attached to the CRD resource name.

Plural: pdfdocs.

Scope: Namespaced. This determines whether the custom resource (CR) can be created in a namespace or globally.

spec.names.kind: this determines the kind of CR to be created from the CRD, in the same way that Deployment and CronJob are kinds of built-in resources.
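These fields are related to each other: the CRD's metadata.name has to be the plural name followed by the group, and instances of the resource use the group and version as their apiVersion. The short Go sketch below spells that out. The helper names crdName and apiVersion are hypothetical.

```go
package main

import "fmt"

// crdName builds the CRD's metadata.name, which takes the form <plural>.<group>.
func crdName(plural, group string) string {
	return plural + "." + group
}

// apiVersion builds the apiVersion value used by instances of the resource,
// which takes the form <group>/<version>.
func apiVersion(group, version string) string {
	return group + "/" + version
}

func main() {
	fmt.Println(crdName("pdfdocs", "alpharm.henry.com"))
	fmt.Println(apiVersion("alpharm.henry.com", "v1"))
}
```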

Creating the Custom Resource Definition (CRD)

Create a file named crd.yaml:

apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: pdfdocs.alpharm.henry.com # plural_name.the_group_name
spec:
  group: alpharm.henry.com
  names:
    kind: pdfdoc
    plural: pdfdocs
    shortNames:
      - pd
  scope: Namespaced # this defines where the custom resource instances will be created, namespaced or globally
  versions:
    - name: v1
      served: true # this determines if this version v1 is used and will be served, if false no one uses it
      storage: true # this determines if this version will be stored in etcd
      schema:
        openAPIV3Schema: # let's create our resource here
          type: object
          description: 'APIVersion defines the versioned schema of this representation
            of an object. Servers should convert recognized schemas to the latest
            internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#resources'
          properties:
            apiVersion: # our typical kubernetes resource format: apiVersion, kind, metadata, spec
              type: string
            kind:
              type: string
              description: 'Kind is a string value representing the REST resource this
                object represents. Servers may infer this from the endpoint the client
                submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#types-kinds'
            metadata:
              type: object
            spec:
              type: object
              properties:
                documentName:
                  type: string # uses array, boolean, integer, number, object, string
                text:
                  type: string

Then create the Custom Resource Definition:

$ kubectl apply -f crd.yaml

customresourcedefinition.apiextensions.k8s.io/pdfdocs.alpharm.henry.com created

$ kubectl get crd

NAME                        CREATED AT
pdfdocs.alpharm.henry.com   2024-07-30T12:37:28Z

When we run this to list the documents:

$ kubectl get pdfdocs

No resources found in default namespace.

This signifies that the pdfdocs resource type is registered, but there aren't any documents available yet.

Let's create a resource from our Custom Resource Definition. Save it as test-manifest.yaml:

apiVersion: alpharm.henry.com/v1
kind: pdfdoc
metadata:
  name: my-document
spec:
  documentName: build
  text: |
    we are creating a document to test our first crd
    so this can be fun to try out

Then we create the resource:

$ kubectl apply -f test-manifest.yaml

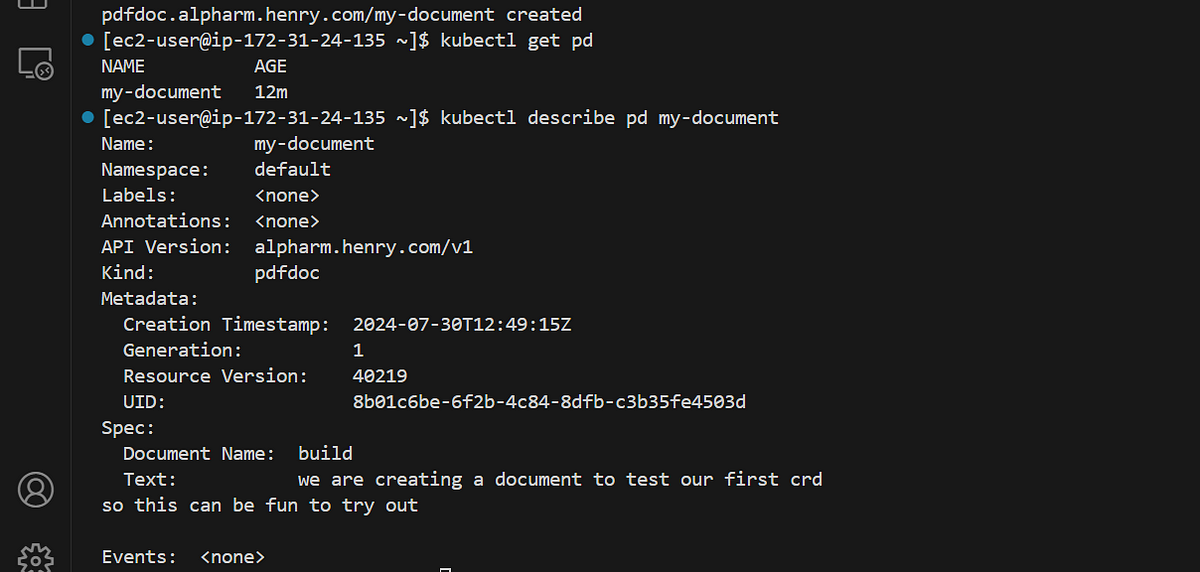

pdfdoc.alpharm.henry.com/my-document created

Now we can view it with kubectl get pdfdocs.

Custom resources consume storage space in the same way that ConfigMaps do. Creating too many custom resources may overload your API server’s storage space.

Now let's create your custom controller

Let's set up a Go environment for the controller. Create a directory and initialize a Go module:

mkdir k8s-controller

cd k8s-controller

go mod init k8s-controller

then you add the dependencies

go get k8s.io/apimachinery@v0.22.0 k8s.io/client-go@v0.22.0 sigs.k8s.io/controller-runtime@v0.9.0

Next, we will create a file named main.go and add this code:

package main

import (
	"context"
	"flag"
	"log"
	"os"
	"os/signal"
	"path/filepath"
	"syscall"

	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/apis/meta/v1/unstructured"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/apimachinery/pkg/runtime/schema"
	"k8s.io/apimachinery/pkg/watch"
	"k8s.io/client-go/dynamic"
	"k8s.io/client-go/rest"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/clientcmd"
	"k8s.io/client-go/util/homedir"
)

func main() {
	// Default to ~/.kube/config when a home directory is available
	var kubeconfig string
	if home := homedir.HomeDir(); home != "" {
		kubeconfig = filepath.Join(home, ".kube", "config")
	}

	// Allow the kubeconfig file to be specified via a flag
	flag.StringVar(&kubeconfig, "kubeconfig", kubeconfig, "absolute path to the kubeconfig file")
	flag.Parse()

	// Try the kubeconfig first, then fall back to the in-cluster config
	config, err := clientcmd.BuildConfigFromFlags("", kubeconfig)
	if err != nil {
		log.Println("Falling back to in-cluster config")
		config, err = rest.InClusterConfig()
		if err != nil {
			log.Fatalf("Failed to get in-cluster config: %v", err)
		}
	}

	// The dynamic client works with custom resources without generated types
	dynClient, err := dynamic.NewForConfig(config)
	if err != nil {
		log.Fatalf("Failed to create dynamic client: %v", err)
	}

	// Identify the custom resource by group, version, and plural name
	pdfdoc := schema.GroupVersionResource{Group: "alpharm.henry.com", Version: "v1", Resource: "pdfdocs"}

	// List and watch pdfdocs in all namespaces (empty namespace)
	informer := cache.NewSharedIndexInformer(
		&cache.ListWatch{
			ListFunc: func(options metav1.ListOptions) (runtime.Object, error) {
				return dynClient.Resource(pdfdoc).Namespace("").List(context.Background(), options)
			},
			WatchFunc: func(options metav1.ListOptions) (watch.Interface, error) {
				return dynClient.Resource(pdfdoc).Namespace("").Watch(context.Background(), options)
			},
		},
		&unstructured.Unstructured{},
		0,
		cache.Indexers{},
	)

	// Log every add, update, and delete event
	informer.AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc: func(obj interface{}) {
			log.Println("Add event detected:", obj)
		},
		UpdateFunc: func(oldObj, newObj interface{}) {
			log.Println("Update event detected:", newObj)
		},
		DeleteFunc: func(obj interface{}) {
			log.Println("Delete event detected:", obj)
		},
	})

	stop := make(chan struct{})
	defer close(stop)

	go informer.Run(stop)

	if !cache.WaitForCacheSync(stop, informer.HasSynced) {
		log.Fatalf("Timeout waiting for cache sync")
	}

	log.Println("Custom Resource Controller started successfully")

	// Block until the process receives SIGINT or SIGTERM
	sigCh := make(chan os.Signal, 1)
	signal.Notify(sigCh, syscall.SIGINT, syscall.SIGTERM)
	<-sigCh
}

The program first resolves the path to your kubeconfig file. I set the default to ~/.kube/config, but users can specify a different path with the -kubeconfig flag. If no kubeconfig can be loaded, it falls back to the in-cluster configuration. This Go program sets up a basic Kubernetes custom resource controller that listens for changes to my custom resource type, pdfdocs. It uses the client-go library to interact with Kubernetes and logs the add, update, and delete events for our custom resource.
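The handlers in main.go log the whole object, which gets noisy. A next step would be to pull out individual fields. The sketch below shows the idea using only the standard library: it walks a nested map of the same shape as the content of an unstructured object. The helper nestedString and the sample event map are hypothetical; in the real controller the map would come from the Object field of the *unstructured.Unstructured value passed to the handler, and the apimachinery unstructured package ships its own NestedString helper for the same purpose.

```go
package main

import "fmt"

// nestedString walks a nested map along the given path and returns the
// string found there. It returns false if any step is missing or if the
// final value is not a string.
func nestedString(obj map[string]interface{}, fields ...string) (string, bool) {
	var current interface{} = obj
	for _, field := range fields {
		m, ok := current.(map[string]interface{})
		if !ok {
			return "", false
		}
		current, ok = m[field]
		if !ok {
			return "", false
		}
	}
	s, ok := current.(string)
	return s, ok
}

func main() {
	// A trimmed-down, hand-written copy of a pdfdoc object.
	event := map[string]interface{}{
		"kind": "pdfdoc",
		"metadata": map[string]interface{}{
			"name":      "my-document",
			"namespace": "default",
		},
		"spec": map[string]interface{}{
			"documentName": "build",
		},
	}

	if name, ok := nestedString(event, "spec", "documentName"); ok {
		fmt.Println("documentName:", name)
	}
	if _, ok := nestedString(event, "spec", "missing"); !ok {
		fmt.Println("spec.missing is not set")
	}
}
```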

Dockerize the controller

We create a Docker image from this Go program. Create a file named Dockerfile:

# Use an official Golang image to build the Go application

FROM golang:1.22.5 AS build

# Set the working directory inside the container

WORKDIR /app

# Copy the go.mod and go.sum files and download dependencies

COPY go.mod go.sum ./

RUN go mod download

# Copy the rest of the application source code

COPY . .

# Build the Go application

RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o k8s-controller .

# Use a minimal base image for the final container

FROM alpine:3.14

# Copy the built Go binary from the builder stage

COPY --from=build /app/k8s-controller /usr/local/bin/k8s-controller

# Set the entrypoint to the Go application

ENTRYPOINT ["/usr/local/bin/k8s-controller"]

then build the image and push to your repository

docker build -t henriksin1/k8s-controller:v1 .

#then run

docker push henriksin1/k8s-controller:v1

the docker repo :

https://hub.docker.com/repository/docker/henriksin1/k8s-controller

Set up your Role-Based Access Control

First, create the service account that your controller will use:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: k8s-controller-sa
  namespace: default

deploy the service account

kubectl apply -f 1-serviceaccout.yaml

Next, create a cluster role with the necessary permissions and a cluster role binding to bind the cluster role to the service account.

ClusterRole:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: k8s-controller-role
rules:
  - apiGroups: ["alpharm.henry.com"]
    resources: ["pdfdocs"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]

Deploy the ClusterRole:

kubectl apply -f 2-clusterrole.yaml
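To see what this rule grants, here is a simplified Go sketch of how a rule with apiGroups, resources, and verbs can be matched against a request. It is an illustration under stated assumptions, not the actual Kubernetes authorizer: the rule type and the allows method are hypothetical, and details such as resourceNames and subresources are left out. The only special case handled is the "*" wildcard.

```go
package main

import "fmt"

// rule mirrors the three fields used in the ClusterRole above.
type rule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

// contains reports whether list holds want or the "*" wildcard.
func contains(list []string, want string) bool {
	for _, item := range list {
		if item == want || item == "*" {
			return true
		}
	}
	return false
}

// allows reports whether the rule permits verb on resource in group.
func (r rule) allows(group, resource, verb string) bool {
	return contains(r.APIGroups, group) &&
		contains(r.Resources, resource) &&
		contains(r.Verbs, verb)
}

func main() {
	controllerRule := rule{
		APIGroups: []string{"alpharm.henry.com"},
		Resources: []string{"pdfdocs"},
		Verbs:     []string{"get", "list", "watch", "create", "update", "patch", "delete"},
	}

	// The informer in main.go needs list and watch on pdfdocs.
	fmt.Println(controllerRule.allows("alpharm.henry.com", "pdfdocs", "list"))
	fmt.Println(controllerRule.allows("alpharm.henry.com", "pdfdocs", "watch"))
	// Pods live in the core ("") group, which this rule does not cover.
	fmt.Println(controllerRule.allows("", "pods", "list"))
}
```

Since the controller in this article only lists and watches pdfdocs, a tighter rule with just get, list, and watch would likely be enough for it.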

ClusterRoleBinding:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-controller-binding
subjects:
  - kind: ServiceAccount
    name: k8s-controller-sa
    namespace: default
roleRef:
  kind: ClusterRole
  name: k8s-controller-role
  apiGroup: rbac.authorization.k8s.io

deploy the clusterrolebinding resource

kubectl apply -f 3-clusterrolebinding.yaml

Create your deployment and specify the service account name for the pod to use.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: k8s-controller
  labels:
    app: k8s-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8s-controller
  template:
    metadata:
      labels:
        app: k8s-controller
    spec:
      serviceAccountName: k8s-controller-sa
      containers:
        - name: k8s-controller
          image: henriksin1/k8s-controller:v1
          args: []

Deploy the controller:

kubectl apply -f 4-deployment.yaml

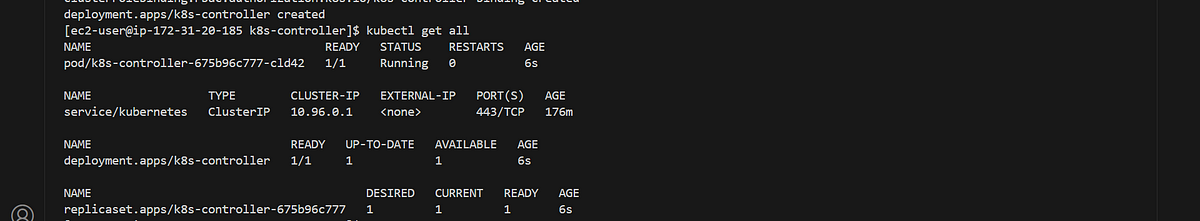

Once all resources are running, you can check the pod's logs to confirm:

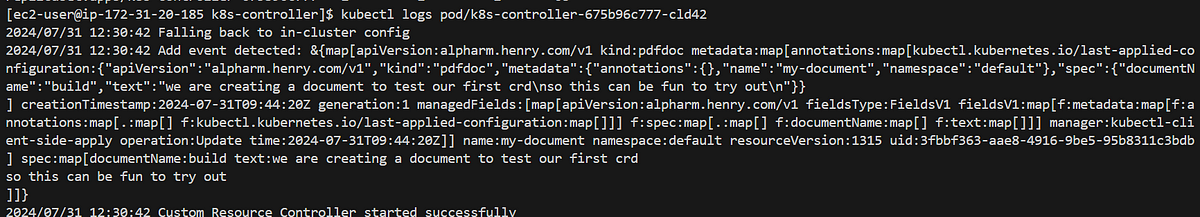

kubectl logs pod/k8s-controller-675b96c777-cld42

2024/07/31 12:30:42 Falling back to in-cluster config

2024/07/31 12:30:42 Add event detected: &{map[apiVersion:alpharm.henry.com/v1 kind:pdfdoc metadata:map[annotations:map[kubectl.kubernetes.io/last-applied-configuration:{"apiVersion":"alpharm.henry.com/v1","kind":"pdfdoc","metadata":{"annotations":{},"name":"my-document","namespace":"default"},"spec":{"documentName":"build","text":"we are creating a document to test our first crd\nso this can be fun to try out\n"}}

] creationTimestamp:2024-07-31T09:44:20Z generation:1 managedFields:[map[apiVersion:alpharm.henry.com/v1 fieldsType:FieldsV1 fieldsV1:map[f:metadata:map[f:annotations:map[.:map[] f:kubectl.kubernetes.io/last-applied-configuration:map[]]] f:spec:map[.:map[] f:documentName:map[] f:text:map[]]] manager:kubectl-client-side-apply operation:Update time:2024-07-31T09:44:20Z]] name:my-document namespace:default resourceVersion:1315 uid:3fbbf363-aae8-4916-9be5-95b8311c3bdb] spec:map[documentName:build text:we are creating a document to test our first crd

so this can be fun to try out

]]}

2024/07/31 12:30:42 Custom Resource Controller started successfully

Now let's modify our pdfdoc:

$ kubectl edit pd my-document

pdfdoc.alpharm.henry.com/my-document edited

[ec2-user@ip-172-31-20-185 k8s-controller]$ kubectl logs pod/k8s-controller-675b96c777-cld42

2024/07/31 12:30:42 Falling back to in-cluster config

2024/07/31 12:30:42 Add event detected: &{map[apiVersion:alpharm.henry.com/v1 kind:pdfdoc metadata:map[annotations:map[kubectl.kubernetes.io/last-applied-configuration:{"apiVersion":"alpharm.henry.com/v1","kind":"pdfdoc","metadata":{"annotations":{},"name":"my-document","namespace":"default"},"spec":{"documentName":"build","text":"we are creating a document to test our first crd\nso this can be fun to try out\n"}}

] creationTimestamp:2024-07-31T09:44:20Z generation:1 managedFields:[map[apiVersion:alpharm.henry.com/v1 fieldsType:FieldsV1 fieldsV1:map[f:metadata:map[f:annotations:map[.:map[] f:kubectl.kubernetes.io/last-applied-configuration:map[]]] f:spec:map[.:map[] f:documentName:map[] f:text:map[]]] manager:kubectl-client-side-apply operation:Update time:2024-07-31T09:44:20Z]] name:my-document namespace:default resourceVersion:1315 uid:3fbbf363-aae8-4916-9be5-95b8311c3bdb] spec:map[documentName:build text:we are creating a document to test our first crd

so this can be fun to try out

]]}

2024/07/31 12:30:42 Custom Resource Controller started successfully

2024/07/31 15:19:06 Update event detected: &{map[apiVersion:alpharm.henry.com/v1 kind:pdfdoc metadata:map[annotations:map[kubectl.kubernetes.io/last-applied-configuration:{"apiVersion":"alpharm.henry.com/v1","kind":"pdfdoc","metadata":{"annotations":{},"name":"my-document","namespace":"default"},"spec":{"documentName":"build","text":"we are creating a document to test our first crd\nso this can be fun to try out\n"}}

] creationTimestamp:2024-07-31T09:44:20Z generation:2 managedFields:[map[apiVersion:alpharm.henry.com/v1 fieldsType:FieldsV1 fieldsV1:map[f:metadata:map[f:annotations:map[.:map[] f:kubectl.kubernetes.io/last-applied-configuration:map[]]] f:spec:map[.:map[] f:documentName:map[]]] manager:kubectl-client-side-apply operation:Update time:2024-07-31T09:44:20Z] map[apiVersion:alpharm.henry.com/v1 fieldsType:FieldsV1 fieldsV1:map[f:spec:map[f:text:map[]]] manager:kubectl-edit operation:Update time:2024-07-31T15:19:06Z]] name:my-document namespace:default resourceVersion:29595 uid:3fbbf363-aae8-4916-9be5-95b8311c3bdb] spec:map[documentName:build text:this is a article about customresourcedefinition what do your think

so this can be fun to try out
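In the logs above, metadata.generation moves from 1 to 2 after the edit. An update handler could use that field to tell a real edit apart from other update notifications. The Go sketch below shows one way to do it. It is a sketch under assumptions: the helper names generationOf and generationChanged are hypothetical, the objects are plain maps of the same shape as the logged ones, and it assumes the cluster bumps generation when the object's spec changes, as it did in this log.

```go
package main

import "fmt"

// generationOf reads metadata.generation from a decoded object.
// It accepts int64 and float64 because JSON decoders differ in how they
// represent numbers; which one applies is an assumption of this sketch.
func generationOf(obj map[string]interface{}) (int64, bool) {
	meta, ok := obj["metadata"].(map[string]interface{})
	if !ok {
		return 0, false
	}
	switch g := meta["generation"].(type) {
	case int64:
		return g, true
	case float64:
		return int64(g), true
	default:
		return 0, false
	}
}

// generationChanged reports whether both objects carry a generation and
// the two values differ.
func generationChanged(oldObj, newObj map[string]interface{}) bool {
	oldGen, okOld := generationOf(oldObj)
	newGen, okNew := generationOf(newObj)
	return okOld && okNew && oldGen != newGen
}

func main() {
	before := map[string]interface{}{
		"metadata": map[string]interface{}{"name": "my-document", "generation": int64(1)},
	}
	after := map[string]interface{}{
		"metadata": map[string]interface{}{"name": "my-document", "generation": int64(2)},
	}

	fmt.Println("edited:", generationChanged(before, after))
	fmt.Println("unchanged:", generationChanged(after, after))
}
```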

Conclusion

Custom Resource Definitions (CRDs) empower Kubernetes users to extend the platform’s capabilities, enabling the management of custom application resources using Kubernetes’ declarative API. By leveraging CRDs, you can create custom controllers and operators to automate complex workflows and integrate seamlessly with external tools and services. Whether you’re managing databases, custom application configurations, or automated workflows, CRDs provide the flexibility and power to tailor Kubernetes to your specific needs.

Feel free to experiment with CRDs and explore how they can simplify and enhance the management of your applications within Kubernetes.

See you all next time.

If you found this blog insightful and want to dive deeper into topics like AWS cloud, Kubernetes, and cloud-native projects or anything related, check out my LinkedIn page: https://www.linkedin.com/in/emeka-henry-uzowulu-38900088/

And also my GitHub: https://github.com/A-LPHARM/

feel free to share your thoughts and ask any questions
